Why do I slam the term "megapixel"?

Simply put, the size of the pixel is an important part of the chip construction, as important (I would argue) as the pixel count (the megapixels).

Think sound recording, and signal-to-noise ratio. A bigger pixel gives you more information. Here's how I understand it working… thanks to a great explanation by David O'Brien (again).

The pixel acts as a light sensor, but it does not generate a voltage by itself; it is actually a phototransistor. That is, it is a switch that adjusts its conductivity in response to the amount of light hitting it. A photodiode does the same thing, but can't handle as much voltage, and a photo-voltaic cell (which makes current all by itself) can't generate much of anything at the size we need. (Think sensitivity and ISO here…)

So, you feed this switch a current, and depending on how much light is hitting it, you get current out. A bigger switch will handle more current. This is how you get sensitivity to light… if you have a little tiny range of current you can feed this thing, you have a narrow dynamic range, right? Feed a switch a ton of current and you get a bigger dynamic range. The 6-megapixel Philips CCD used in the Leaf and similar cameras had a 12 micron pixel, and was the size of a 35mm frame. The Canon EOS-1Ds has 11MP, a 35mm-frame sized chip, and the pixel is an 8.8 micron pitch (on a CMOS chip).
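
As a rough sanity check on those pitch figures, here is a minimal sketch that estimates pixel pitch from the chip dimensions and the pixel count. It assumes square pixels that tile the whole chip, and it takes a "35mm-frame sized" chip to be 36 x 24 mm; the function name `pixel_pitch_microns` is made up for illustration.

```python
import math

def pixel_pitch_microns(width_mm, height_mm, megapixels):
    """Estimate pixel pitch, assuming square pixels that cover the whole chip."""
    area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)  # chip area in square microns
    pixels = megapixels * 1_000_000
    return math.sqrt(area_um2 / pixels)

# Assumption: a 35mm-frame sized chip measures 36 x 24 mm.
for mp in (6, 11):
    print(f"{mp} MP on a 36 x 24 mm chip: about {pixel_pitch_microns(36, 24, mp):.1f} microns")
```

Under those assumptions the estimates land close to the 12 micron and 8.8 micron figures quoted above; real chips differ a little because the active area is not exactly 36 x 24 mm.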

Speaking of the CMOS chip, here's the thing on that… We're feeding current to a switch, right? Think of a garden hose going to a valve. If the valve is tight we're going to get most of what we feed the switch back out, so there will be a nice, linear response to our valve "opening". If the valve is one of those cheap plastic things, then it's going to blow water all over the place, and that is exactly what a CMOS chip is… a cheap chip that is easy to make, but leaks current all over the place. It works fine when you feed it current from a little tiny pixel in your cell-phone camera, but when you make it bigger and feed it more current you start popping leaks, and thus lose a lot of the advantage of the CMOS in the first place.

The irony of the CMOS process is that the first and best solution to fixing the leakage problem is to increase the quality of the silicon in the chip, which then increases the price of the chip, which makes it less attractive from the get-go.

That all said, they have learned a lot about how to squeeze the last little bit of juice out of a tiny pixel. (One of the most interesting strategies is to start processing the information right at the pixel… you get access to the current pixel-by-pixel, and can skip some of the leaks.) More pixels do, indeed, make a higher resolution image, but you have to take into account the actual size of the chip, too… and if you do, then you can compare two cameras based on megapixels. If the chip is the same size, and one is a 6mp chip and the other is a 10mp chip, then the 10mp is going to have more resolution.

You can't assume, though, that it is a better file, because you don't know how good they are at processing that for a good signal/noise ratio. The best yardstick is still the price. You want to go fast, you gotta pay the money, but obviously, a 10mp point and shoot with a tiny chip is not going to perform like a 10mp with a chip the size of a 35mm frame.
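
To put a number on that last comparison, here is a small sketch of how much light-gathering area each pixel gets when the same 10mp is spread over two different chips. The tiny chip's 6 x 4.5 mm dimensions are a made-up, illustrative figure (not any particular camera), the 35mm frame is taken as 36 x 24 mm, and `pixel_area_um2` is a hypothetical helper that assumes the pixels cover the whole chip.

```python
def pixel_area_um2(width_mm, height_mm, megapixels):
    """Area available per pixel in square microns, assuming pixels tile the whole chip."""
    chip_area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    return chip_area_um2 / (megapixels * 1_000_000)

# Assumption: illustrative chip sizes, both carrying 10 megapixels.
tiny_chip = pixel_area_um2(6.0, 4.5, 10)     # hypothetical point-and-shoot chip
full_frame = pixel_area_um2(36.0, 24.0, 10)  # chip the size of a 35mm frame

print(f"tiny chip:  {tiny_chip:.1f} square microns per pixel")
print(f"full frame: {full_frame:.1f} square microns per pixel")
print(f"area ratio: about {full_frame / tiny_chip:.0f}x")
```

Same megapixel count, but with these assumed sizes each pixel on the big chip has dozens of times the area to collect light.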

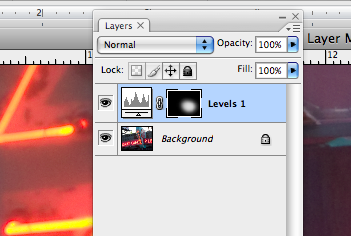

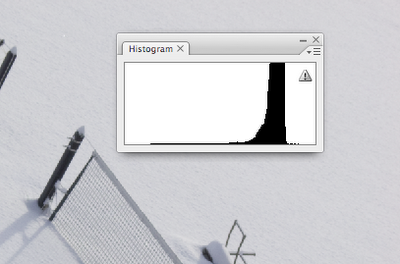

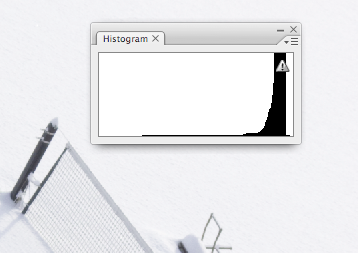

To close the loop here, now you can see where the bit-depth of the chip comes from. Remember, when you convert an analog value (in this case your current) to digital, in 8-bit you get 256 possible values (0 through 255). In 16-bit conversions you get 65,536 values (0 through 65,535). That comes right from the amount of information we are getting from our little valve.
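
The arithmetic behind those counts is simple enough to sketch: an n-bit conversion has 2 to the power n possible codes, counting zero, so the highest code is one less than the count. The helper name `levels` is just for illustration.

```python
def levels(bits):
    """Number of distinct codes an n-bit conversion can produce."""
    return 2 ** bits

for bits in (8, 12, 14, 16):
    count = levels(bits)
    print(f"{bits}-bit: {count} levels, codes 0..{count - 1}")
```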

If you can feed that valve a ton of current, you can get a ton of current out, and get a ton of information from it. If you have an itsy bitsy valve, you can only feed it a small current, and you only get a small range of values to work with. This is why a little bitty 1.5 micron pixel can only give you 8-bit files: it only has 256 (or fewer) values available to it, while a 12 micron pixel can give you 65,536 values, or a 16-bit digital file.

You can see this throughout the range of digital cameras… where the marketing guys make the technical file information available, you can see that some digital cameras have 12-bit RAW files, some 14-bit, and some have a true 16-bit file. Generally that specification will be closely tied to the price of the camera.

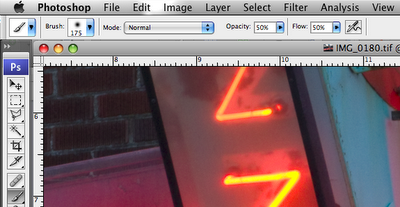

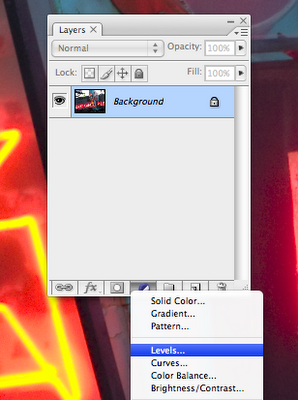

You also get to see how that depth will really determine the quality of the file… you can process a 12-bit file up to 16-bit in Adobe Camera Raw, but if you don't have that volume of information there in the first place, it is not any more real information than the original 12 bits.
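
Here is a minimal sketch of that last point. It promotes every possible 12-bit code into the 16-bit range with a plain left shift, which is only an assumption for illustration and not a description of how Adobe Camera Raw actually does its conversion; `promote_12_to_16` is a made-up name.

```python
def promote_12_to_16(value12):
    """Scale a 12-bit code (0..4095) into the 16-bit range by shifting left 4 bits."""
    return value12 << 4

twelve_bit_codes = range(2 ** 12)
sixteen_bit_codes = [promote_12_to_16(v) for v in twelve_bit_codes]

distinct_after = len(set(sixteen_bit_codes))
print(f"distinct values after promotion: {distinct_after}")
print(f"slots available in a 16-bit file: {2 ** 16}")
```

The file gets a bigger container, but the number of distinct values in it is still whatever the 12-bit capture delivered.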
